
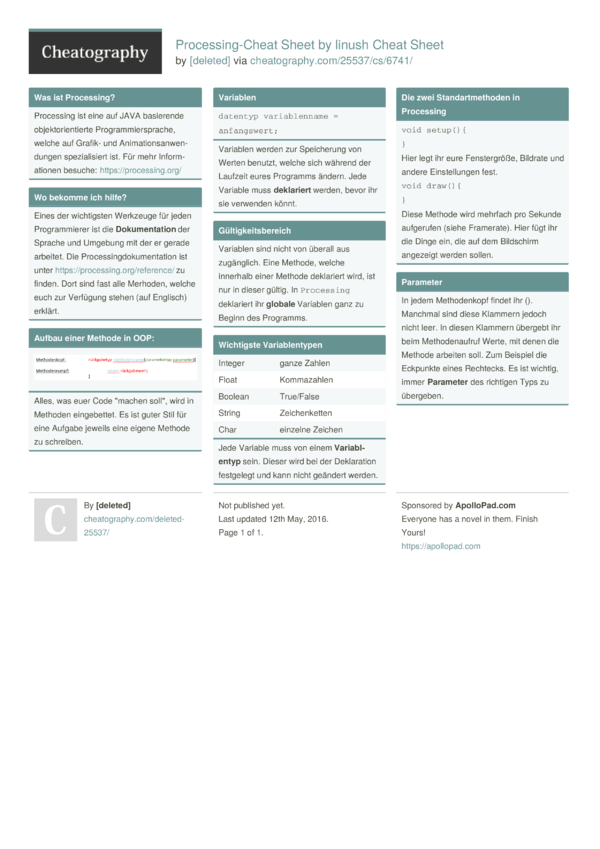


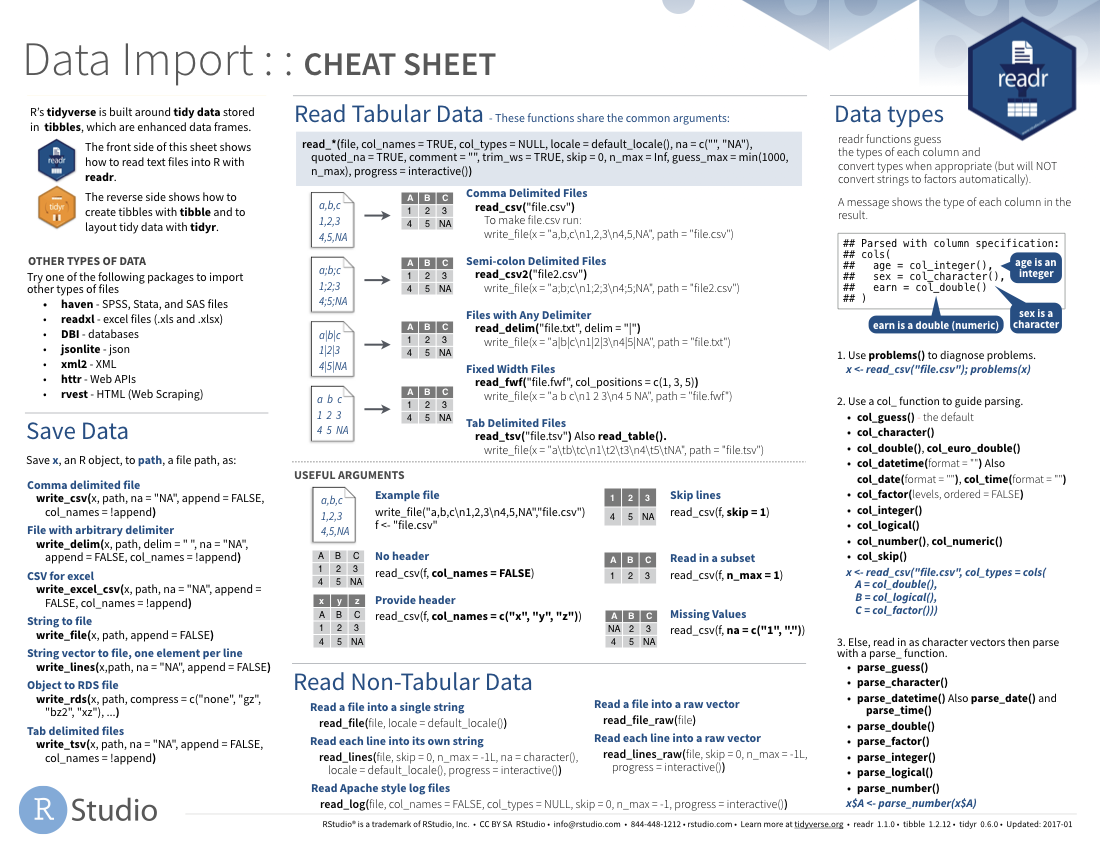



The digital age has given enterprises a wealth of new options to measure their operations. Gone are the days of manually updating database tables: today’s digital systems capture the minutiae of user interactions with apps and connected devices.

With digital data being generated at a tremendous pace, developers and analysts have a broad range of possibilities when it comes to operationalizing data and preparing it for analytics and machine learning.

One of the fundamental questions you need to ask when planning out your data architecture is the question of batch vs stream processing: do you process data as it arrives, in real time or near real time, or do you wait for data to accumulate before running your ETL job? This guide gives a high-level overview of the considerations to take into account when making that decision.

Batch Processing

What is batch processing?

In batch processing, we wait for a certain amount of raw data to “pile up” before running an ETL job. Typically this means data is between an hour and a few days old before it is made available for analysis. Batch ETL jobs typically run on a set schedule (e.g., every 24 hours) or, in some cases, once the amount of data reaches a certain threshold.
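A minimal sketch of the two batch triggers described above: a fixed schedule and a size threshold. The `BatchBuffer` class, its parameters and the `run_etl` callback are hypothetical names for illustration, not part of any particular ETL tool.

```python
import time
from typing import Callable, List


class BatchBuffer:
    """Hypothetical helper: accumulates raw records and runs an ETL callback
    on the whole batch, either when `max_records` have piled up or when
    `interval_s` seconds have passed since the last run."""

    def __init__(self, run_etl: Callable[[List[dict]], None],
                 max_records: int = 10_000, interval_s: float = 24 * 3600,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.run_etl = run_etl
        self.max_records = max_records
        self.interval_s = interval_s
        self.clock = clock
        self.records: List[dict] = []
        self.last_run = clock()

    def add(self, record: dict) -> None:
        # Records only pile up here; nothing is processed on arrival.
        self.records.append(record)
        if len(self.records) >= self.max_records:
            self.flush()  # size-threshold trigger

    def tick(self) -> None:
        # Call periodically (e.g., from a scheduler) for the time-based trigger.
        if self.clock() - self.last_run >= self.interval_s:
            self.flush()

    def flush(self) -> None:
        # Hand the whole accumulated batch to the ETL job, then start a new one.
        if self.records:
            self.run_etl(self.records)
        self.records = []
        self.last_run = self.clock()
```

For example, with `max_records=3`, adding seven records runs the ETL callback twice (on records 0 to 2 and 3 to 5), and the seventh record waits for the next trigger.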

When to use batch processing?

By definition, batch processing entails latencies between the time data appears in the storage layer and the time it is available in analytics or reporting tools. However, this is not necessarily a major issue, and we might choose to accept these latencies because we prefer working with batch processing frameworks.

For example, if we’re trying to analyze the correlation between SaaS license renewals and customer support tickets, we might want to join a table from our CRM with one from our ticketing system. If that join happens once a day rather than the second a ticket is resolved, it probably won’t make much of a difference.
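As a rough illustration of this example, the sketch below joins a day’s CRM renewal records with ticket counts from a ticketing system in a single batch pass. The field names (`customer_id`, `renewed`) and the in-memory lists are assumptions; in practice these would be tables in a warehouse.

```python
from collections import Counter
from typing import List


def join_renewals_with_tickets(renewals: List[dict], tickets: List[dict]) -> List[dict]:
    """Hypothetical daily batch join of CRM renewals with support-ticket counts."""
    # Count tickets per customer across the whole day's batch.
    ticket_counts = Counter(t["customer_id"] for t in tickets)
    # Left join: every renewal row gets its ticket count
    # (a Counter returns 0 for customers with no tickets).
    return [
        {**r, "ticket_count": ticket_counts[r["customer_id"]]}
        for r in renewals
    ]
```

Running this once a day instead of on every resolved ticket changes only how fresh `ticket_count` is, which is exactly the trade-off described above.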

To generalize, you should lean towards batch processing when:

- Data freshness is not a mission-critical issue

- You are working with large datasets and are running a complex algorithm that requires access to the entire batch, e.g., sorting the entire dataset

- You get access to the data in batches rather than in streams

- You are joining tables in relational databases
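To make the “requires access to the entire batch” criterion concrete: an exact median cannot be finalized until every value is available, whereas a mean can be updated one record at a time. This is a plain-Python sketch of that contrast, not a recommendation of any particular tool.

```python
import statistics
from typing import List


def exact_median(batch: List[float]) -> float:
    # Needs the whole batch: statistics.median sorts the full list of values.
    return statistics.median(batch)


class RunningMean:
    """Incremental mean with O(1) state, so it suits record-at-a-time processing."""

    def __init__(self) -> None:
        self.count = 0
        self.total = 0.0

    def update(self, value: float) -> float:
        # Fold one new value into the running state and return the current mean.
        self.count += 1
        self.total += value
        return self.total / self.count
```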

Batch processing tools and frameworks

- Open-source frameworks from the Hadoop ecosystem, such as Spark and MapReduce, are a popular choice for big data processing

- For smaller datasets and application data, you might use batch ETL tools such as Informatica and Alteryx

- Cloud data warehouses such as Amazon Redshift and Google BigQuery


Stream Processing

What is stream processing?

In stream processing, we process data as soon as it arrives in the storage layer, which is often (though not always) very close to the time it was generated. This typically happens within sub-second timeframes, so that to the end user the processing appears to happen in real time. These operations are usually stateless, or keep only a small amount of state, so they tend to involve relatively simple transformations or calculations.
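A minimal sketch of record-at-a-time stream processing with a small amount of state. A Python generator stands in for a real stream (for example, a Kafka topic); the event fields (`user`, `page`) and the per-user running count are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator


def process_stream(events: Iterable[dict]) -> Iterator[dict]:
    """Hypothetical processor: handles each event as soon as it arrives."""
    clicks_per_user: Dict[str, int] = defaultdict(int)  # the only state kept
    for event in events:
        # Stateless transformation: normalize the page path.
        page = event["page"].strip().lower()
        # Small stateful step: update a running count for this user.
        clicks_per_user[event["user"]] += 1
        yield {"user": event["user"], "page": page,
               "clicks_so_far": clicks_per_user[event["user"]]}
```

Each output is emitted as soon as its input event is read; nothing waits for later events.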

When to use stream processing

While stream processing and real-time processing are not necessarily synonymous, we would use stream processing when we need to analyze or serve data as close as possible to when we get hold of it.

Examples of scenarios where data freshness is super-important could include real-time advertising, online inference in machine learning, or fraud detection. In these cases we have data-driven systems that need to make a split-second decision: which ad to serve? Do we approve this transaction? We would use stream processing to quickly access the data, perform our calculations and reach a result.
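As an illustration of such a split-second decision, the sketch below approves or declines each transaction as it arrives, using a simple velocity rule: decline if a card already has the maximum number of approved transactions within a short sliding window. The rule, thresholds and names are hypothetical; real fraud detection typically relies on much richer models.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class VelocityCheck:
    """Hypothetical per-event fraud rule with bounded state per card."""

    def __init__(self, max_txns: int = 3, window_s: float = 60.0) -> None:
        self.max_txns = max_txns
        self.window_s = window_s
        # Timestamps of recently approved transactions, per card.
        self.recent: Dict[str, Deque[float]] = defaultdict(deque)

    def approve(self, card_id: str, timestamp: float) -> bool:
        times = self.recent[card_id]
        # Drop timestamps that have slid out of the window.
        while times and timestamp - times[0] >= self.window_s:
            times.popleft()
        if len(times) >= self.max_txns:
            return False  # too many transactions in the window: decline
        times.append(timestamp)  # only approved transactions are recorded
        return True
```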

Indications that stream processing is the right approach:

- Data is being generated in a continuous stream and arriving at high velocity

- Sub-second latency is crucial

Stream processing tools and frameworks

Stream processing and micro-batch processing are often used synonymously, and frameworks such as Spark Streaming actually process data in micro-batches. However, there are also pure-play stream processing tools such as Confluent’s KSQL, which processes data directly in a Kafka stream, and Apache Flink. Apache Flume is also used in streaming pipelines, mainly for collecting and moving large volumes of log data.

Micro-batch Processing

What is micro-batch processing?

In micro-batch processing, we run batch processes on much smaller accumulations of data, typically less than a minute’s worth. This means data is available in near real time. In practice, there is little difference between micro-batching and stream processing, and the terms are often used interchangeably in descriptions of data architectures and software platforms.
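A minimal sketch of micro-batching: events are grouped into fixed, non-overlapping (tumbling) windows, here 30 seconds as an arbitrary choice under one minute, and each small batch is handed off as soon as its window closes. The `micro_batches` function and the `ts` timestamp field are hypothetical, and the sketch assumes events arrive in timestamp order.

```python
from typing import Iterable, Iterator, List, Optional, Tuple


def micro_batches(events: Iterable[dict], window_s: int = 30) -> Iterator[Tuple[int, List[dict]]]:
    """Yield (window_start, batch) each time a tumbling window closes.

    Hypothetical helper; assumes in-order events and does not handle late data.
    """
    current_start: Optional[int] = None
    batch: List[dict] = []
    for event in events:
        start = int(event["ts"] // window_s) * window_s
        if current_start is not None and start != current_start:
            yield current_start, batch  # window closed: process this small batch
            batch = []
        current_start = start
        batch.append(event)
    if batch:
        yield current_start, batch  # flush the final, partially filled window
```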

When to use micro-batch processing

Micro-batch processing is useful when we need very fresh data, but not necessarily real-time data: we can’t wait an hour or a day for a batch job to run, but we also don’t need to know what happened in the last few seconds.

Example scenarios could include web analytics (clickstream) or user behavior. If a large ecommerce site makes a major change to its user interface, analysts would want to know how this affected purchasing behavior almost immediately because a drop in conversion rates could translate into significant revenue losses. However, while a day’s delay is definitely too long in this case, a minute’s delay should not be an issue – making micro-batch processing a good choice.
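Continuing the clickstream example, here is a sketch of the per-window metric an analyst might watch after a UI change: the share of active sessions in each one-minute micro-batch that include a purchase. The event shape (`ts`, `session`, `type`) and the one-minute window are assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set


def conversion_rate_per_minute(events: Iterable[dict]) -> Dict[int, float]:
    """Hypothetical metric: {minute_start: purchasing sessions / active sessions}."""
    sessions: Dict[int, Set[str]] = defaultdict(set)
    buyers: Dict[int, Set[str]] = defaultdict(set)
    for e in events:
        minute = int(e["ts"] // 60) * 60  # start of the 60-second window
        sessions[minute].add(e["session"])
        if e["type"] == "purchase":
            buyers[minute].add(e["session"])
    return {m: len(buyers[m]) / len(sessions[m]) for m in sorted(sessions)}
```

A sudden drop in these per-minute rates after a release would surface within minutes rather than after the next daily batch.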

Micro-batch processing tools and frameworks

- Apache Spark Streaming is one of the most popular open-source frameworks for micro-batch processing.

- Vertica offers support for micro-batches.

The above are general guidelines for determining when to use batch vs stream processing; each of these topics warrants further research in its own right.

Upsolver: Streaming-first ETL Platform

One of the major challenges when working with big data streams is the need to orchestrate multiple systems for batch and stream processing, which often leads to complex tech stacks that are difficult to maintain and manage.

Whether you’re just building out your big data architecture or are looking to optimize ETL flows, Upsolver provides a comprehensive self-service platform that combines batch, micro-batch and stream processing and enables developers and analysts to easily combine streaming and historical big data.

Processing Cheat Sheet

Structure

() (parentheses), , (comma), . (dot), /* */ (multiline comment), /** */ (doc comment), // (comment), ; (semicolon), = (assign), [] (array access), {} (curly braces), catch, class, draw(), exit(), extends, false, final, implements, import, loop(), new, noLoop(), null, pop(), popStyle(), private, public, push(), pushStyle(), redraw(), return, setLocation(), setResizable(), setTitle(), setup(), static, super, this, thread(), true, try, void

Environment

cursor(), delay(), displayDensity(), focused, frameCount, frameRate(), frameRate, fullScreen(), height, noCursor(), noSmooth(), pixelDensity(), pixelHeight, pixelWidth, settings(), size(), smooth(), width

Data

Primitive

boolean, byte, char, color, double, float, int, long

Composite

Array, ArrayList, FloatDict, FloatList, HashMap, IntDict, IntList, JSONArray, JSONObject, Object, String, StringDict, StringList, Table, TableRow, XML

Conversion

binary(), boolean(), byte(), char(), float(), hex(), int(), str(), unbinary(), unhex()

String Functions

join(), match(), matchAll(), nf(), nfc(), nfp(), nfs(), split(), splitTokens(), trim()

Array Functions

append(), arrayCopy(), concat(), expand(), reverse(), shorten(), sort(), splice(), subset()

Control

Relational Operators

!= (inequality), < (less than), <= (less than or equal to), == (equality), > (greater than), >= (greater than or equal to)

Iteration

for, while

Conditionals

?: (conditional), break, case, continue, default, else, if, switch

Logical Operators

! (logical NOT), && (logical AND), || (logical OR)

Shape

createShape(), loadShape(), PShape

2D Primitives

arc(), circle(), ellipse(), line(), point(), quad(), rect(), square(), triangle()

Curves

bezier(), bezierDetail(), bezierPoint(), bezierTangent(), curve(), curveDetail(), curvePoint(), curveTangent(), curveTightness()

3D Primitives

box(), sphere(), sphereDetail()

Attributes

ellipseMode(), rectMode(), strokeCap(), strokeJoin(), strokeWeight()

Vertex

beginContour(), beginShape(), bezierVertex(), curveVertex(), endContour(), endShape(), quadraticVertex(), vertex()

Loading & Displaying

shape(), shapeMode()

Input

Mouse

mouseButton, mouseClicked(), mouseDragged(), mouseMoved(), mousePressed(), mousePressed, mouseReleased(), mouseWheel(), mouseX, mouseY, pmouseX, pmouseY

Keyboard

key, keyCode, keyPressed(), keyPressed, keyReleased(), keyTyped()

Files

BufferedReader, createInput(), createReader(), launch(), loadBytes(), loadJSONArray(), loadJSONObject(), loadStrings(), loadTable(), loadXML(), parseJSONArray(), parseJSONObject(), parseXML(), selectFolder(), selectInput()

Time & Date

day(), hour(), millis(), minute(), month(), second(), year()

Output

Text Area

print(), printArray(), println()

Image

save(), saveFrame()

Files

beginRaw(), beginRecord(), createOutput(), createWriter(), endRaw(), endRecord(), PrintWriter, saveBytes(), saveJSONArray(), saveJSONObject(), saveStream(), saveStrings(), saveTable(), saveXML(), selectOutput()

Transform

applyMatrix(), popMatrix(), printMatrix(), pushMatrix(), resetMatrix(), rotate(), rotateX(), rotateY(), rotateZ(), scale(), shearX(), shearY(), translate()

Lights, Camera

Lights

ambientLight(), directionalLight(), lightFalloff(), lights(), lightSpecular(), noLights(), normal(), pointLight(), spotLight()

Camera

beginCamera(), camera(), endCamera(), frustum(), ortho(), perspective(), printCamera(), printProjection()

Coordinates

modelX(), modelY(), modelZ(), screenX(), screenY(), screenZ()

Material Properties

ambient(), emissive(), shininess(), specular()

Color

Setting

background(), clear(), colorMode(), fill(), noFill(), noStroke(), stroke()

Creating & Reading

alpha(), blue(), brightness(), color(), green(), hue(), lerpColor(), red(), saturation()

Image

createImage(), PImage

Loading & Displaying

image(), imageMode(), loadImage(), noTint(), requestImage(), tint()

Textures

texture(), textureMode(), textureWrap()

Pixels

blend(), copy(), filter(), get(), loadPixels(), pixels[], set(), updatePixels()

Rendering

blendMode(), clip(), createGraphics(), hint(), noClip(), PGraphics

Shaders

loadShader(), PShader, resetShader(), shader()

Typography

PFont

Loading & Displaying

createFont(), loadFont(), text(), textFont()

Attributes

textAlign(), textLeading(), textMode(), textSize(), textWidth()

Metrics

textAscent(), textDescent()

Math

PVector

Operators

% (modulo), * (multiply), *= (multiply assign), + (addition), ++ (increment), += (add assign), - (minus), -- (decrement), -= (subtract assign), / (divide), /= (divide assign)

Bitwise Operators

& (bitwise AND), << (left shift), >> (right shift), | (bitwise OR)

Calculation

abs(), ceil(), constrain(), dist(), exp(), floor(), lerp(), log(), mag(), map(), max(), min(), norm(), pow(), round(), sq(), sqrt()

Trigonometry

acos(), asin(), atan(), atan2(), cos(), degrees(), radians(), sin(), tan()

Random

noise(), noiseDetail(), noiseSeed(), random(), randomGaussian(), randomSeed()